There’s no denying that artificial intelligence (AI) is having a tremendous impact on the legal industry. With over one in five lawyers already using AI in their practices according to the Legal Trends Report, it’s safe to say that AI is here to stay. However, the enthusiastic adoption of AI in the legal industry has not come without potential AI legal issues. We’ve all heard the stories about lawyers citing fake, AI-generated cases in briefs and the consequences arising from their oversight.

More recently, there’s been concern over the consequences facing law firms that signed on to Microsoft’s Azure OpenAI Service, which provides access to OpenAI’s AI models via the Azure cloud. More than a year after signing on, many law firms became aware of a term of use stating that Microsoft was entitled to retain and manually review certain user prompts. While this term might not be concerning on its own, it represents a potential breach of client confidentiality requirements for any law firm that has shared confidential client information with these models.

These examples are by no means intended to scare lawyers away from AI—rather, they represent some of the potential pitfalls of adopting AI technology that law firms must be aware of to effectively adopt AI while also upholding their professional duties and protecting clients.

In this blog post, we’ll explore some of the potential legal issues with AI technology and what law firms can do to overcome them. Keep in mind that, at the end of the day, your jurisdiction’s rules of professional conduct will dictate whether, and how, you use AI technology. The suggestions below are meant to help lawyers navigate the muddy waters of AI adoption.

With that in mind, let’s look at some of the questions law firms should be asking themselves if they have adopted, or are planning to adopt, AI in their practices.

What does my bar association say about AI use?

For lawyers, your starting point should be your bar association’s rules of professional conduct along with ethics opinions that address AI use.

Several states have already released advisory AI ethics opinions outlining whether and how lawyers can use AI when practicing law. Unsurprisingly, AI ethics opinions like the one recently released by the Florida Bar prioritize maintaining client confidentiality, reviewing work products to ensure they are accurate and sufficient, avoiding unethical billing practices, and complying with lawyer advertising restrictions.

If your bar association hasn’t released an advisory AI ethics opinion, turn to other states that have. Their opinions can help guide what you need to look out for when using AI. It’s also essential to review your bar association’s rules of professional conduct and consider how the applicable principles could apply to your use of AI. For example, broad directions on competence or maintaining client confidentiality will likely have a bearing on how your firm chooses to implement AI technology and what processes you’ll follow when using it.

Actions:

- Determine whether your jurisdiction’s bar association has released any ethics opinions relating to AI usage (and, if it has not, consult existing AI ethics opinions in other jurisdictions for insight).

- Review your bar association’s rules of professional conduct and consider how the applicable principles could apply to your use of AI.

What do my AI tool’s terms of service say?

Not all AI tools are built equally, and not all AI tools have the same terms of service. As the Microsoft Azure example above shows, if your firm fails to thoroughly review a tool’s terms of service, you might miss critical information about how your information is being used and risk running afoul of client confidentiality requirements.

As a result, it’s essential for law firms to thoroughly vet AI solutions before using them. Do your research and, if appropriate, evaluate multiple tools to ensure that your solution of choice aligns with your firm’s goals and does not create unneeded risk. For example, existing tools like Harvey AI and Clio’s forthcoming proprietary AI technology, Clio Duo, are designed specifically for law firms and operate on the principle of protecting sensitive legal data.

Actions:

- Before adopting AI technology, thoroughly vet the tool—including its terms of service—to determine whether the tool is appropriate for your law firm’s needs.

- Consider AI tools designed specifically for law firms, such as Harvey AI and Clio’s forthcoming proprietary AI technology, Clio Duo.

What is my firm using AI for?

Another consideration when bringing AI into your law firm is simple: What do you plan to use AI technology for? Different AI models serve different purposes and come with different risks. Likewise, the tasks a firm assigns to AI can raise or lower the firm’s risk.

When we asked what lawyers were currently using AI for in the 2023 Legal Trends Report, legal research and drafting documents came out on top. However, our research also uncovered that many lawyers are interested in using AI to help with other document-oriented tasks, like finding and storing documents, getting documents signed, and drafting documents.

Here, we see some nuance in potential risk. For example, using AI for legal research could be considered lower risk than asking an AI model to store documents. Legal research here might mean asking an AI model for case law that matches a particular set of facts (without exposing client information) or asking it to summarize the salient points of existing case law. In this sense, context matters, which is why it’s important for law firms to clearly outline their goals before adopting AI technology.

Actions:

- Consider what your law firm hopes to achieve with AI, including the specific tasks that your firm will use the AI tool for, and identify any associated risks that will need to be addressed.

Has my firm clearly outlined its stance on AI use?

Once your firm has clearly outlined goals relating to AI use, it’s equally important to ensure those goals are clearly articulated. This is where a law firm AI policy can help. By first determining whether and how your firm should be using AI, and then outlining those expectations in an AI policy, you can help ensure that your entire team is on the same page and minimize your risk of running into potential issues.

Actions:

- Develop an AI policy outlining which AI tools have been approved by your firm and how employees are expected to use the tools.

What do my employees need to know about AI use?

Creating an AI policy for your law firm is just one component of ensuring firm-wide responsible AI use. A policy only helps if employees know what it expects of them, so discuss your expectations with employees and implement training to ensure they know how to use the AI software responsibly. Ongoing education, such as lunch and learns or regular AI meetings where employees can discuss AI topics or ask and answer questions, can also foster a sense of openness and collaboration and help team members learn from each other’s successes and challenges.

Actions:

- Educate employees on responsible AI usage, including their obligations under your firm’s AI policy.

- Offer ongoing education, such as lunch and learns or regular AI meetings, to encourage employees to discuss AI topics or ask and answer questions.

AI legal issues: our final thoughts

The enthusiastic adoption of AI in the legal industry presents endless opportunities for efficiency and innovation, but it also comes with significant legal considerations that law firms must address. As demonstrated by examples such as the potential breach of client confidentiality with AI service providers, law firms must navigate a complex landscape of ethical and professional responsibilities when integrating AI into their practices.

To overcome these challenges, law firms must thoroughly review their jurisdiction’s rules of professional conduct, seek guidance from advisory AI ethics opinions, and carefully vet any potential AI solutions. Clearly communicate your AI policies and provide ongoing education for employees to ensure that AI solutions are used responsibly firm-wide. By taking proactive steps to address these potential AI legal issues, law firms can harness the power of AI while upholding their commitment to ethical and responsible legal practice.

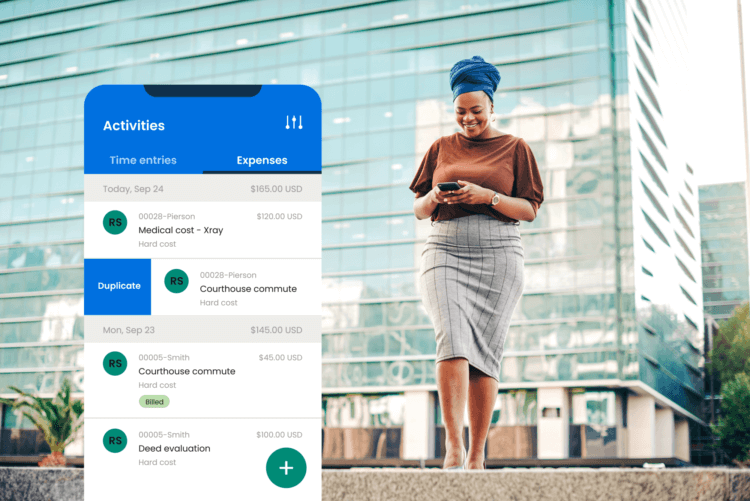

Consider, too, the role that legal-specific AI tools can play in ensuring that your law firm can responsibly adopt AI technology. For example, Clio Duo, our forthcoming proprietary AI technology, can help law firms harness the power of AI while protecting sensitive client data and adhering to the highest security standards.

We published this blog post in April 2024.
