On October 30, 2023, President Biden signed the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence—described as a “landmark” AI Executive Order to manage the risks of artificial intelligence (AI) while cementing America’s place as a global AI leader.

But what does this Executive Order tell us about the U.S. government’s thoughts on AI? Below, we’ve outlined some of the directives from the AI Executive Order that may have a particularly significant impact on legal professionals, along with our thoughts on what this AI Executive Order suggests about the future use of AI in America.

The AI Executive Order at a glance

The AI Executive Order has far-reaching implications, ranging from America’s impact abroad to equity and inclusion. Below, we’ve highlighted some of the key takeaways which provide insights into the U.S. government’s vision for the future of AI.

AI safety and security

While AI’s capabilities have the potential to transform many facets of American life, the AI Executive Order also recognizes the implications for American safety and security. To mitigate the potential risks of AI systems, the AI Executive Order directs that:

- Developers of the most powerful AI systems must share safety test results and other critical information with the U.S. government.

- The National Institute of Standards and Technology will set guidelines to develop standards, tools, and tests to help ensure the safety and trustworthiness of AI systems.

- Standards and best practices must be established for authenticating “real” content while detecting AI-generated content to protect Americans from AI-enabled fraud.

- Advanced cybersecurity programs will be established to develop AI tools to find and fix vulnerabilities in critical software.

The AI Executive Order’s directions relating to safety and security are wide-ranging. Interestingly, they appear to cover two critical aspects of safety and security: ensuring that AI is used safely and securely, and directing organizations to harness the potential of AI to increase safety and security in critical areas.

AI and privacy

The AI Executive Order includes a call to Congress to pass data privacy legislation to protect the public from the potential privacy risks posed by increased AI use. This section also creates several directives around prioritizing, strengthening, and evaluating privacy-preserving techniques. These directives will require law firms to comply with stricter privacy standards and could demand reviews of how they collect, store, and use data via AI systems.

AI and equity

The AI Executive Order also pushes for advancing equity and civil rights, offering guidance to prevent AI algorithms from propagating discrimination. Law firms employing AI for tasks like data analysis, prediction modeling, or even client interaction will need to be particularly attentive to ensuring their AI does not inadvertently perpetuate biases or discrimination, which could open them up to potential lawsuits or legal challenges.

The AI Executive Order specifically mentions best practices concerning AI’s role in areas like sentencing, risk assessments, and crime forecasting. Law firms and legal professionals will need to stay up to date on these best practices, which will affect how AI is employed within litigation, criminal defense, or prosecutorial contexts.

What the AI Executive Order tells us about attitudes towards AI

The AI Executive Order confirms that, from the U.S. government’s perspective, AI isn’t going away any time soon. Rather than suggesting that the U.S. government fears AI, the commitment to managing risks and shaping how AI will be used by the government as outlined in the AI Executive Order suggests that the U.S. government sees AI as an inevitable—and perhaps integral—part of our evolving society.

Does this presumed positive attitude towards AI advancement extend to the legal profession?

Lawyers and AI: the general consensus

According to the 2023 Legal Trends Report, lawyers, on average, are hesitant to wholly adopt AI technology. These feelings appear to be at odds with public—and, by extension, client—perceptions of AI. For example, clients are more likely than legal professionals to think that AI’s benefits outweigh the costs (32% vs. 19%), that the justice system could be improved with AI (32% vs. 19%), that courts should use AI (27% vs. 17%), and that AI improves the quality of legal services (32% vs. 21%).

Despite the hesitation among legal professionals to embrace AI, we know that 19% of lawyers are already using AI in their practices, while 51% want to use it in the future. Of the legal professionals who are interested in using AI in the future, 71% hope to do so within the next year.

Final thoughts on the AI Executive Order

We know there has been ongoing interest in AI among the legal profession, and many lawyers have already found ways to incorporate AI tools into their practice.

The AI Executive Order therefore shouldn’t deter lawyers from incorporating AI tools into their practice; rather, it indicates that the U.S. government believes AI is worth investing in. Deploying AI ethically and responsibly has the potential to radically transform the legal profession—as the 2023 Legal Trends Report offered, “[AI] will enable those who embrace it—and those who embrace it will displace those who don’t.”

However, it’s also a great reminder that AI use does come with risk. Without comprehensive regulations regarding AI use in place yet, it’s important to maintain a critical eye when using AI tools. We’re all too familiar with cautionary tales, like the New York lawyer who relied on an incorrect brief created by AI, and with the ethical concerns around AI use in the legal profession.

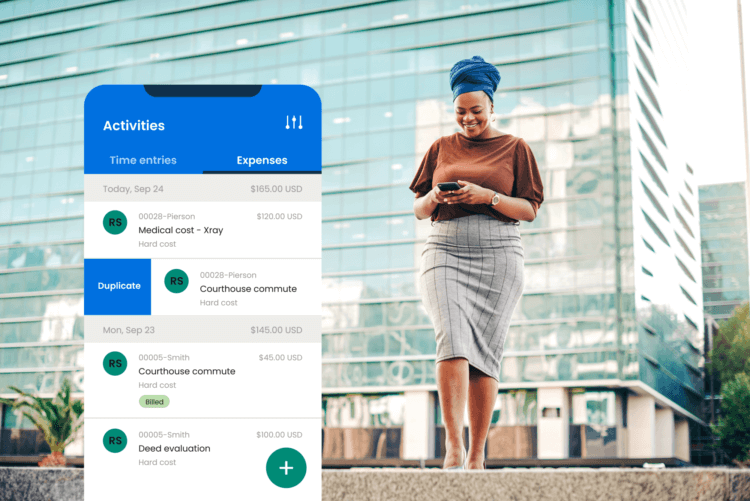

That’s why we’re doing everything we can to set our customers up for AI success with Clio Duo, our forthcoming AI functionality built on the robust foundation of our proprietary AI technology and platform-wide principle of protecting sensitive legal data. Clio Duo adheres to the highest security, compliance, and privacy standards held throughout Clio’s entire operating system. When available to the market, Clio Duo’s audit log functionality will ensure that all AI activity is logged and discoverable, a foundational aspect of responsible and sustainable legal AI practice spearheaded by Clio.

We can’t wait to introduce you to Clio Duo. For the time being, be sure to sign up for one of Clio’s AI webinars to help you understand the risks and opportunities of using AI in your practice.

We published this blog post in November 2023.
